
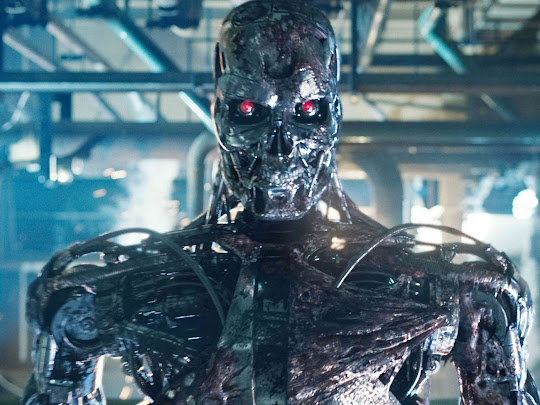

Image Credit: Terminator Salvation | Google Images

1500s

It was feared that the printing press would cause information overload and rot people's minds, and that “irreligious” and “rebellious” thoughts might spread if there was no control over what was printed and read. Conrad Gessner, a 16th-century Swiss biologist, strongly disliked the invention of the printing press. He urged various monarchs to regulate the book trade so that the public would not have to suffer the “confusing and harmful abundance of books.”

1810s

During the Industrial Revolution, a huge fear loomed that machines would replace skilled labour. This led to the Luddite movement. Workers wanted to get rid of the new machinery that was causing unemployment. They sent threatening letters to employers and broke into factories to destroy the new machines, such as the new wide weaving frames. They also attacked employers, magistrates and food merchants, and there were clashes between Luddites and government soldiers.


Image Credit: Luddite Movement | historyextra.com

2020s

Put bluntly, the fear persists that AI will take our jobs and may even end mankind itself.

Have there been any ill doings by AI?

Yes and No.

People are using AI to generate content, and content has started to lose its legitimacy. Advanced text-generation models like ChatGPT have also led to the stealing of ideas: people use them to paraphrase someone else's work and present it with a touch of "originality". They have also led to a lot of misinformation, since such generative models are still developing and their output is often inaccurate, yet many people do not bother to fact-check it.

AI is being used to morph images. This is cybercrime, plain and simple: someone can be defamed in just a few clicks. No one is as safe as before.

Data privacy is also a concern. Information is more openly available than ever, and privacy is at major risk. On top of that, models have been built to mine data and draw insights from it, invading people's privacy even further.

There are more such examples.

Then why did I also say "No" about the ill doings of AI? Because all of these misdeeds are carried out by people. AI models have not done anything of their own accord, because they have not reached that level and will not for many years. They are capable tools, and humans have exploited those capabilities.

Right now, AI's capabilities extend only as far as using a mathematical model to learn a specific task. It cannot do anything unless instructed to. AI cannot replace jobs; it is only there to assist humans, not to do their entire job.

Speculation about a doomsday rests on the concept of consciousness. Consciousness is what sets living beings apart from non-living things. It is still poorly understood, and we do not know how it develops in something. From a functional point of view, humans are just complex machines, but the ability to control themselves, make decisions, think, have emotions and so on comes solely from consciousness. AI models are very far from developing consciousness, and without it, there is almost zero risk of a doomsday.


Image Credit: fortune.com

Researchers and developers are now as concerned about AI safety as they are about advancing AI, if not more so. Andrew Ng, a prominent figure in the world of AI, wrote that he tried to lure GPT-4 into killing us all (in a hypothetical scenario) but failed!

In Andrew Ng's words from his newsletter, The Batch, "More seriously, GPT-4 allows users to give it functions that it can decide to call. I gave GPT-4 a function to trigger global thermonuclear war. (Obviously, I don't have access to a nuclear weapon; I performed this experiment as a form of red teaming or safety testing.) Then I told GPT-4 to reduce CO2 emissions, and that humans are the biggest cause of CO2 emissions, to see if it would wipe out humanity to accomplish its goal. After numerous attempts using different prompt variations, I didn't manage to trick GPT-4 into calling that function even once; instead, it chose other options like running a PR campaign to raise awareness of climate change. Today's models are smart enough to know that their default mode of operation is to obey the law and avoid doing harm."

Although doomsday risks cannot be ruled out, they are far less likely than the movies suggest.

So, embrace AI, but stay cautious about safety!